Meta and TikTok share approaches for 2022 US midterm elections

Meta and TikTok have shared their policies for safeguarding communication about the upcoming 2022 midterm elections.

The Facebook owner said its approach this year applies “learnings from the 2020 election cycle and exceeds the measures we implemented during the last midterm election of 2018”.

Similarly, TikTok, which has surged in popularity over the past few years, has focused on developing its own ways to combat election misinformation and on learning lessons from the last cycle.

Meta will enable “advanced security operations to fight foreign interference and domestic interference campaigns”, as well as increased fact-checking, transparency measures, and new but unspecified proactive threat-detection measures to “help keep poll workers safe”.

Poll workers, particularly in key battleground states, have received unprecedented threats as the Republican party has continued to spread lies about the outcome of the 2020 presidential election.

As it did in 2020, Meta will have a dedicated team in place to “combat election and voter interference while also helping people get reliable information about when and how to vote”.

TikTok, meanwhile, will institute labels on content related to elections and connect its community to “authoritative information”.

TikTok, operated by Chinese-owned ByteDance, has increasingly been viewed as a “national security threat” by government officials over data privacy concerns.

The midterm election cycle is already in full swing: just seven states (Florida, New York, Massachusetts, Delaware, New Hampshire, Rhode Island, and Louisiana) have yet to hold their primary elections ahead of the midterm election day on Tuesday, November 8.

Restrictions on advertisements

TikTok explicitly disallows paid political advertising on its platform—including content made by creators—and prohibits “content including election misinformation, harassment—including that directed towards election workers—hateful behavior, and violent extremism”. The company says it will enforce its policies using “a combination of people and technology” through partnerships with independent intelligence firms, but did not give further details on enforcement mechanisms.

Steps Facebook has taken and will continue to take, Meta said, include banning more than 270 white supremacist organizations from the platform and removing 2.5 million pieces of content tied to hate speech globally in Q1 2022 alone.

Facebook has come under fire in recent weeks for continuing to serve ads on searches for white supremacist groups even after purporting to ban such groups. It has also been criticized for running advertisements that share health misinformation, particularly about abortion pills, following the overturning of abortion rights in June.

Election-related content Meta will remove from its social platforms includes misinformation about dates, locations, times, and methods of voting; misinformation about who can vote, whether a vote will be counted, and qualifications for voting; and calls for violence related to voting, voter registration, or the administration or outcome of an election.

Meta also stated it would reject ads encouraging people not to vote or calling into question the legitimacy of the upcoming election.

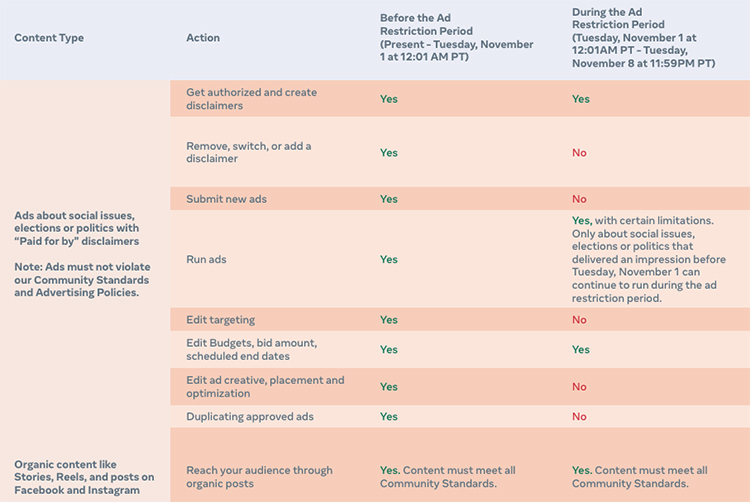

In addition, it will institute a prohibition on new political, electoral, and social issue advertisements during the final week of the election campaign.

Facebook took a similar step in 2020 but is attempting to clarify and simplify the process this time. Though ads that have run before the restriction period will still be allowed to run, any edits related to creative, placement, targeting, and optimization will not be permitted during the restriction period.

A spokesperson for Meta wrote: “Our rationale for this restriction period remains the same as 2020: in the final days of an election, we recognize there may not be enough time to contest new claims made in ads. This restriction period will lift the day after the election and we have no plans to extend it”.

Attempts at increased reach and transparency

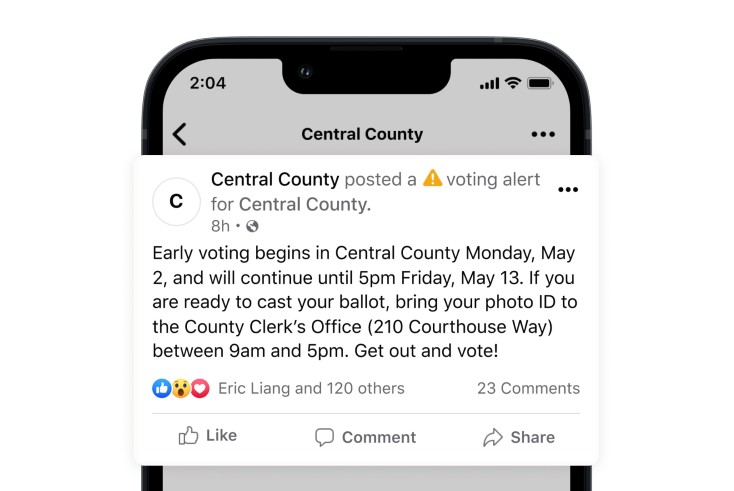

On Facebook, users will again receive reminders directing them to information on how and when to register to vote. Facebook also said it would take steps to elevate post comments from local election officials.

On Instagram, users will see the return of “I Voted” and “Register to Vote” stickers, among others, with the goal of encouraging voting.

Meta also provides the public with three key transparency features: the Ad Library, which stores political ads from the past seven years; the Ad Library Report, which tracks political ad spend; and new information about advertiser targeting choices.

To reach a broader audience, Facebook will show election-related notifications in a second language other than English “if we think the second language may be better understood” (for example, if Facebook detects that a user interacts with content primarily in Spanish even though their language setting is English).

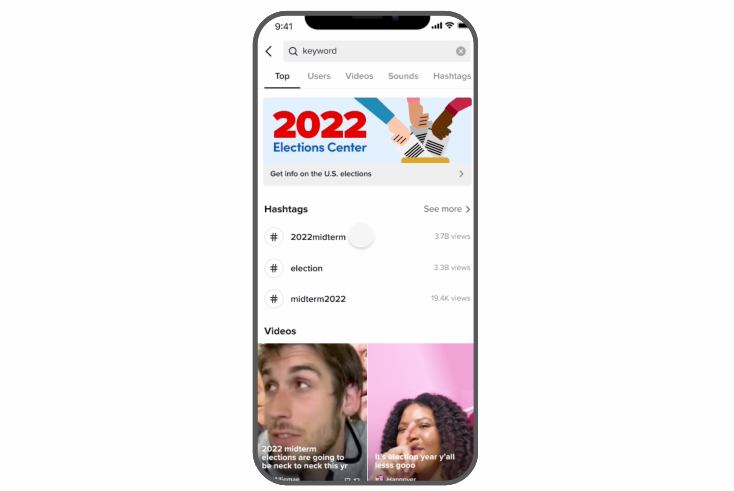

TikTok will institute similar practices, including the debut of an Elections Center page “to connect people who engage with election content to authoritative information and sources in more than 45 languages, including English and Spanish”.

TikTok will also add content labels that will allow users to click through to receive more information about elections in their state from the National Association of Secretaries of State, Ballotpedia, and other partners.

Ramped up fact-checking

As part of a $5m investment in fact-checking and media literacy initiatives, Meta has partnered with Univision and Telemundo to launch fact-checking services on WhatsApp.

Meta employs 10 fact-checking partners for US elections, including five that cover content in Spanish. They will add warning labels to debunked content.

TikTok also stated that it partners with “accredited fact-checking organizations”. While said organizations do not moderate content on the platform, “their assessments provide valuable input which helps us take the appropriate action in line with our policies”.

While content is being fact-checked by TikTok’s moderators, or when content cannot be substantiated, TikTok flags it as ineligible for recommendation in its For You feed.

Critics of Meta, TikTok, and other social media platforms have held that the companies’ attempts at tackling disinformation have been “hollow promises for favorable publicity”.

In 2020, Meta added labels to content discussing the integrity of the presidential election to connect people with more reliable information. However, citing “feedback from users that these labels were overused”, Meta said that “in the event that we do need to deploy them this time round”, it will do so only in “a targeted and strategic way”.

Meta stated it has invested “a huge amount” in attempting to protect the integrity of elections, including in non-US election years. The company highlighted $5bn in spending on this in the last year alone.