Baroness Kidron: Social media 'talk a good talk' on safety but fight regulations in court

“The problem with digital advertising is not the content of advertising…. The problem is that in the pursuit of the advertiser’s audience, the design of products and services is entirely geared to hold attention, snap up data, and amplify networks. The pursuit of that holy grail creates unacceptable outcomes for kids.”

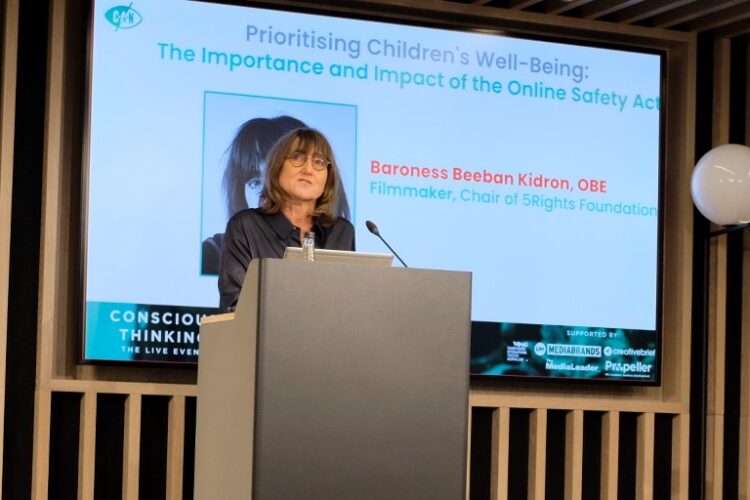

Baroness Beeban Kidron OBE, speaking at the Conscious Advertising Network’s (CAN) Conscious Thinking Live event in London yesterday, discussed the importance of the recently passed Online Safety Act.

A former film director, Kidron has become a global force over the past decade in pushing for social media regulation to protect children from harms on platforms like Facebook, Instagram, TikTok, and YouTube. That includes the passing of the Online Safety Bill in the UK, which is now being implemented by broadcast and telecoms regulator Ofcom.

The Act, which was proposed back when David Cameron was Prime Minister and survived the tumult of successive post-Brexit governments, made an “important distinction,” according to Kidron. That’s because it recognises that the product design of social media sites, not just instances of harmful content posted by individual users, is at fault for harms caused to young users.

Such an argument is core not only to the Online Safety Act, but also to a recent lawsuit brought by 42 US states against Meta, which accuses the company of knowingly designing its social media products to addict children, drawing comparisons to Big Tobacco.

A distinction between social and traditional media

Kidron warned that the Government should not tolerate exceptions for tech companies if they flout the Online Safety Act, and noted that while social media companies “talk a good talk” on safety, they stridently fight all attempts at regulation in court.

She described a meeting with “one of the most senior people in the tech ecosystem” in Silicon Valley who, presumably unhappy with her regulatory efforts, with “smug arms” told her she was “the type of person who just doesn’t like advertising.”

Paraphrasing her response, and drawing a distinction between social media and traditional media, Kidron said: “The difference is, the bus does not sense the child’s gaze and follow them down the road.

“[The bus] does not identify that they are between 13- to 17-years-old, home on a Friday night, and then send commercials for cosmetic surgery on the basis that a teen at home on a Friday is unhappy with their body.

“The TV does not tell other people in the child’s network that they have their attention on something, and then bombard them with an item, whether it’s links to scammers or sites which can convince people to hang themselves in their own wardrobe.

“Every single one of those things was happening on the platform that he represented. And that is not okay.”

Despite Kidron’s success at passing the Online Safety Bill, she admitted she was “hugely disappointed” the Act was limited to protecting children. Doing so was a pragmatic decision to get such a law passed, but Kidron noted that adults are as susceptible to the harms of social media as under-18s are.

‘Star struck’

The Conscious Thinking Live event saw delegates attempt to reconcile the questionable ethics of working in the media and advertising industry as it supports social media platforms and select media owners that promote hate speech and mis- and disinformation.

Apart from Big Tech, the Daily Mail and GB News were regularly referenced as having promoted harmful rhetoric, especially targeting the trans community.

CAN itself drew ire from GB News earlier this year, with public relations professional Richard Hillgrove saying it was “destroying society” on Nigel Farage’s programme. CAN co-founder and co-chair Jake Dubbins addressed the tumult at the Conscious Thinking Live event, saying the coverage was a “pretty constant misrepresentation of what we’re trying to do.”

Later in the afternoon, The Media Leader heard from agency attendees about the difficulty of persuading certain brands not to advertise on particular online platforms.

One agency representative said that C-suite leaders at brands often have preferences for certain social media platforms that cannot be changed despite offering evidence showing the environment is not brand safe or effective at fulfilling key performance indicators.

Instead, CEOs and CMOs often demand to advertise on platforms like TikTok, Instagram, or X on the basis that their own child is on the platforms all the time, according to agency delegates.

“At the end of the day, if a client wants to advertise on a platform, we will buy it for them,” admitted one attendee.

Another agency representative described how one of their clients was “star struck” after meeting X owner Elon Musk in person earlier this year, and that they were reassured by Musk to spend on X despite the recommendation from their agency to avoid the platform.

Musk has repeatedly used his influence on X to endorse overtly antisemitic conspiracy theories. Tech company IBM made headlines yesterday for suspending its advertising on the microblogging site after its ads appeared next to posts praising Adolf Hitler and Nazism.

‘Those with economic influence need to be stepping in’

Meta, meanwhile, has said it will allow political advertising to run on its platforms Facebook and Instagram that questions the result of the 2020 presidential election.

Alexandra Pardel (pictured, above), the campaigns director at Digital Action, a civic organisation that seeks to tackle Big Tech’s “harms to democracy and human rights,” took the stage to implore members of the audience to help lobby such companies to be more responsible.

But, as was discussed in a breakout session attended by The Media Leader at the event, there is currently no profit motive for Big Tech to act responsibly.

When asked by The Media Leader if advertisers should consider boycotting social platforms to effect change, Pardel noted that (in spite of the progress made in the UK on the Online Safety Act) political leaders, especially US political leaders, have failed to “live up to their responsibilities” to regulate the platforms. “That’s where financial power and market power is important. Investors, advertisers, those with economic influence need to be stepping in.”

“It doesn’t serve them for their ads to be running along hate speech, disinformation, and extremely harmful content that are undermining our democracies,” Pardel continued. “Social media companies don’t feel the pressure politically to invest in trust and safety. We need to do all we can to change that.”

Editor’s note: an earlier version of this article reported GB News presenter Nigel Farage said CAN was “destroying society”. That is incorrect; Richard Hillgrove made that comment on Nigel Farage’s show. The article has been amended to reflect that fact.